How to transfer data

No edit summary |

|||

| (77 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

{{Message Box | {{Message Box | ||

|title=Data Transfer Nodes | |title=Data Transfer Nodes | ||

| Line 5: | Line 4: | ||

For performance and resource reasons, file transfers should be performed on the data transfer node arc-dtn.ucalgary.ca rather than on the the ARC login node. Since the ARC DTN has the same shares as ARC, any files you transfer to the DTN will also be available on ARC. | For performance and resource reasons, file transfers should be performed on the data transfer node arc-dtn.ucalgary.ca rather than on the the ARC login node. Since the ARC DTN has the same shares as ARC, any files you transfer to the DTN will also be available on ARC. | ||

}} | }} | ||

= | = Command Line File Transfer Tools = | ||

You may use the following command-line file transfer utilities on Linux, MacOS, and Windows. File transfers using these methods require your computer to be on the University of Calgary campus network or via the University of Calgary IT General VPN. | You may use the following command-line file transfer utilities on Linux, MacOS, and Windows. File transfers using these methods require your computer to be on the University of Calgary campus network or via the University of Calgary IT General VPN. | ||

If you are working on a Windows computer, you will need to install these utilities separately as they are not installed by default. Newer versions of Windows 10 (1903 and up) have '''SSH''' built-in as part of the '''openssh''' package. However, you may be better off using one of the [[#GUI File Transfer]] tools listed in the following section. | If you are working on a Windows computer, you will need to install these utilities separately as they are not installed by default. Newer versions of Windows 10 (1903 and up) have '''SSH''' built-in as part of the '''openssh''' package. However, you may be better off using one of the [[#GUI File Transfer]] tools listed in the following section. | ||

== <code>scp</code>: Secure Copy == | |||

<code>scp</code> is a secure and encrypted method of transferring files between machines via SSH. It is available on Linux and Mac computers by default and can be installed on Windows by installing the OpenSSH package. | <code>scp</code> is a secure and encrypted method of transferring files between machines via SSH. It is available on Linux and Mac computers by default and can be installed on Windows by installing the OpenSSH package. | ||

| Line 184: | Line 24: | ||

You may see all the available options with <code>scp</code> by viewing the [http://man7.org/linux/man-pages/man1/scp.1.html man page]. | You may see all the available options with <code>scp</code> by viewing the [http://man7.org/linux/man-pages/man1/scp.1.html man page]. | ||

=== Example Usage === | |||

Common operations are given below. On your desktop, to: | Common operations are given below. On your desktop, to: | ||

* Transfer a single file (eg. <code>data.dat</code>) to ARC: <syntaxhighlight lang="bash"> | * Transfer a single file (eg. <code>data.dat</code>) to ARC: <syntaxhighlight lang="bash"> | ||

| Line 196: | Line 36: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

== <code>rsync</code> == | |||

<code>rsync</code> is a utility for transferring and synchronizing files efficiently. The efficiency for its file synchronization is achieved by its delta-transfer algorithm, which reduces the amount of data sent over the network by sending only the differences between the source files and the existing files in the destination. | <code>rsync</code> is a utility for transferring and synchronizing files efficiently. The efficiency for its file synchronization is achieved by its delta-transfer algorithm, which reduces the amount of data sent over the network by sending only the differences between the source files and the existing files in the destination. | ||

<code>rsync</code> can be used to copy files and directories locally on a system or between | <code>rsync</code> can be used to copy files and directories locally on a system or between two computers via SSH. Unlike <code>scp</code>. Because it is designed to synchronize two locations, partial transfers can be restarted by re-running <code>rsync</code> without losing progress. Resuming a partial transfer is not possible with <code>scp</code>. | ||

The general format for the command is similar to '''scp''': | The general format for the command is similar to '''scp''': | ||

$ rsync [options] source destination | $ rsync [options] source destination | ||

* The <code>source</code> and <code>destination</code> fields can be a local file / directory or a remote one. | * The <code>source</code> and <code>destination</code> fields can be a local file / directory or a remote one. | ||

* <code>rsync</code> '''cannot''' transfer files between '''two remote''' locations. The source or the destination must be a local path. | |||

* The ''local location'' is a normal Unix path, absolute or relative and | * The ''local location'' is a normal Unix path, absolute or relative and | ||

* The ''remote location'' has a format <code>username@remote.system.name:file/path</code>. | * The ''remote location'' has a format <code>username@remote.system.name:file/path</code>. | ||

| Line 210: | Line 51: | ||

You may see all the available options with <code>rsync</code> by viewing the [http://man7.org/linux/man-pages/man1/rsync.1.html man page]. | You may see all the available options with <code>rsync</code> by viewing the [http://man7.org/linux/man-pages/man1/rsync.1.html man page]. | ||

=== Example Usage === | |||

Common operations are given below. On your desktop, to: | Common operations are given below. On your desktop, to: | ||

| Line 233: | Line 74: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

== <code>sftp</code>: secure file transfer protocol == | |||

* Manual page on-line: http://man7.org/linux/man-pages/man1/sftp.1.html | * Manual page on-line: http://man7.org/linux/man-pages/man1/sftp.1.html | ||

| Line 247: | Line 88: | ||

Commands are case insensitive. | Commands are case insensitive. | ||

== <code>rclone</code>: rsync for cloud storage == | |||

'''Rclone''' is a command line program to sync files and directories to and from a number of on-line storage services. | '''Rclone''' is a command line program to sync files and directories to and from a number of on-line storage services. | ||

* https://rclone.org/ | * https://rclone.org/ | ||

You may backup your data from arc-dtn to your personal 5TB UCalgary OneDrive to create a safe second copy at a distance. | |||

[https://rcs.ucalgary.ca/images/8/8e/Rclone_and_OneDrive_on_arc.pdf detailed rclone configuration instructions] | |||

Please note, if you are syncing your OneDrive with a PC or Mac, your new backup of arc home may be auto-replicated to your computer. You may choose to not replicate using the PC or Mac OneDrive client (help & settings -> settings -> account -> Choose folders) . | |||

== <code>curl</code> and <code>wget</code>: downloading from the internet == | |||

To download a file with the ability to resume a partial download: | |||

curl -c http://example.com/resource.tar.gz -O | |||

wget -c http://example.com/resource.tar.gz | |||

==== MobaXterm (Windows) | |||

= Graphical File Transfer Tools = | |||

== FileZilla == | |||

FileZilla is a free cross-platform file transfer program that can transfer files via FTP and SFTP. | |||

=== Installation === | |||

You may obtain Filezilla from the project's official website at: https://filezilla-project.org/download.php?type=client. '''Please note''': The official installer may bundle ads and unwanted software. Be careful when clicking through. | |||

Alternatively, you may obtain Filezilla from Ninite: https://ninite.com/filezilla | |||

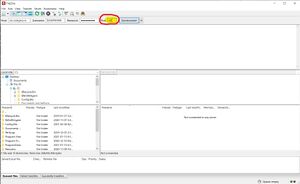

=== Connecting to ARC === | |||

If working off campus, first connect to the University of Calgary General VPN. Open Filezilla and connect to <code>arc-dtn.ucalgary.ca</code> on port <code>22</code>. | |||

[[File:Filezilla.jpg|alt=Connecting to ARC using Filezilla|none|thumb|Connecting to ARC using Filezilla]] | |||

== MobaXterm (Windows) == | |||

'''MobaXterm''' is the recommended tool for remote access and data transfer in '''Windows''' OSes. | '''MobaXterm''' is the recommended tool for remote access and data transfer in '''Windows''' OSes. | ||

| Line 268: | Line 127: | ||

* Website: https://mobaxterm.mobatek.net/ | * Website: https://mobaxterm.mobatek.net/ | ||

== WinSCP (Windows) == | |||

WinSCP is a free Windows file transfer tool. | WinSCP is a free Windows file transfer tool. | ||

https://winscp.net/eng/index.php | https://winscp.net/eng/index.php | ||

= Globus File Transfer = | |||

Globus File Transfer is a cloud based service for file transfer and file sharing. It uses GridFTP technology for high speed and reliable data transfers<br> | |||

* Globus Docs: https://docs.globus.org/ | |||

* The Alliance Docs on Globus: https://docs.alliancecan.ca/wiki/Globus | |||

* Globus How-Tos: https://docs.globus.org/how-to/ | |||

== How to get started == | |||

'''Free File Transfer''': If you work at a non-profit research institution, such as the University of Calgary, | |||

you can use Globus for unlimited transfers between Globus Connect Server endpoints (data sources) | |||

and between a server and personal endpoint. | |||

The RCS group at the University of Calgary has a '''paid subscription for Globus services''', so called '''Globus Plus''', | |||

currently at the '''High Assurance''' level ([https://www.globus.org/subscriptions Globus Subscription Levels]). | |||

You '''do NOT need''' to have to be added to the subscription service, if you only want to '''use Globus to''' | |||

* '''Transfer your own files''' to and from the '''ARC cluster'''. | |||

* '''Transfer files''' your have access on the '''Digital Alliance of Canada clusters'''. | |||

* '''Transfer files''' from your own computer using your '''personal Globus endpoint'''. | |||

* '''Share data''' from the '''ARC cluster'''. | |||

* '''Share data''' from the '''Alliance clusters'''. | |||

You do '''need to be added to the Globus Plus''' if you want to | |||

* '''Share data''' with other researchers using your '''personal Globus end point'''. | |||

To get your account '''added to the Globus Plus service''', please | |||

# Email support@hpc.ucalgary.ca to request that your ucalgary account be added to the campus Globus Plus subscription. | |||

# Check your email client for a 'Welcome to Globus' message with current information to federate your @ucalgary identity with Globus services. | |||

== Use Globus Web Application to transfer files == | |||

* To initiate data transfers using the Globus Web Application, navigate to https://app.globus.org and log into with your UCalgary account. | |||

: If you have your own '''personal GlobusID''' you can login here: https://globusid.org/ . | |||

* On the left panel, click on '''File Manager''' to define/select the collection you wish to use. For example, to transfer data from ARC cluster (collection 1) to Compute Canada cedar cluster (collection 2) | |||

* Under collection, for ARC data transfer node search for '''arc-dtn-collection'''. You will see it is listed with the following description: "Mapped Collection (GCS) on UCalgary ARC-DTN endpoint " | |||

* from the drop down menu. Authenticate your access using UCalgary IT credentials. This will bring you to the home directory on ARC. | |||

* Next, for Compute Canada cedar data transfer node choose 'collection 2' as '''computecanada#cedar-dtn''' from the drop down menu. | |||

: Again authenticate your access using Compute Canada credentials. Navigate to the location where you want to transfer the file. | |||

* Select the file to be transferred from collection 1' and initiate the transfer process. | |||

== Use Globus Web Application to Share Files == | |||

You may grant access to a folder in your allocation on ARC to be used for uploading or sharing files with '''any individual with a globus account''', either through ComputeCanada or their own institution. | |||

Please see https://docs.globus.org/how-to/share-files/ | |||

=== How it works === | |||

'''Note''', that Globus sharing allows sharing data located on the ARC cluster with other people who '''do not possess an ARC account'''. | |||

For the '''external collaborator''' the required steps are: | |||

* The individual who needs access to the data on ARC can get a GlobusID individual account at https://globus.org . | |||

: It will be in the form of <code>globusid_name@globusid.org</code> | |||

* If the individual already has access to '''Globus via another institution''', then that identity can be used. No need in personal Globus ID. | |||

* Even if the collaborator does '''not have any affiliation with Globus''', he/she will be able to authenticate themselves with their '''Google''' (or some others) identity. | |||

For the '''data owner on ARC''': | |||

* Login to https://globus.org using UofC account.. | |||

* Create a '''shared collection''' from a data directory on ARC. | |||

* Add the <code>globusid_name@globusid.org</code> to the access list to the shared collection, with either '''read-only''' or '''read-write''' permissions. | |||

: After that the collaborator will be able to access the shared collection using the individual '''GlobusID''' account. | |||

* The '''link to the shared data will be emailed to the collaborator''' by Globus, based on the email address you indicated in the form. | |||

= Transferring Large Datasets = | |||

=== Using screen and rsync === | |||

If you want to transfer a large amount of data from a remote Unix system to ARC you can use ''<code>rsync</code>'' to handle the transfer. However, you will have to keep your SSH session from your workstation connected during the entire transfer which is often not convenient or not feasible. | |||

For large transfers, please use the <code>arc-dtn.ucalgary.ca</code> data transfer login node instead. | |||

To overcome this one can run the '''rsync''' transfer inside a '''screen''' virtual session on ARC / ARC-DTN. '''screen''' creates a terminal session local to ARC and allows for re-connection from SSH sessions from your workstation. | |||

To begin, login to ARC-DTN and start <code>screen</code> with the screen command: | |||

<source lang=bash> | |||

# Login to ARC-DTN | |||

$ ssh username@arc-dtn.ucalgary.ca | |||

# Start a screen session | |||

$ screen | |||

# While in the new screen session, start the transfer with rsync. | |||

$ rsync -axv ext_user@external.system:path/to/remote/data . | |||

# Disconnect from the screen session with the hotkey 'Ctrl-a d' | |||

# You may now disconnect from ARC or close the lid of you laptop or turn off the computer. | |||

</source> | |||

To check if the transfer has been finished. | |||

<source lang=bash> | |||

# Login to ARC-DTN | |||

$ ssh username@arc-dtn.ucalgary.ca | |||

# Reconnect to the screen session | |||

$ screen -r | |||

# If the transfer has been finished close the screen session. | |||

$ exit | |||

# If the transfer is still running, disconnect from the screen session with the hotkey 'Ctrl-a d' | |||

</source> | |||

=== Very large files === | |||

If the files are large and the transfer speed is low the transfer may fail before the file has been transferred. | |||

'''rsync''' may not be of help here, as it will not restart the file transfer (have not tested recently). | |||

The solution may be to split the large file into smaller chunks, transfer them using rsync and then join them on the remote system (ARC for example): | |||

<source lang=bash> | |||

# Large file is 506MB in this example. | |||

$ ls -l t.bin | |||

-rw-r--r-- 1 username username 530308481 Jun 8 11:06 t.bin | |||

# split the file: | |||

$ split -b 100M t.bin t.bin_chunk. | |||

# Check the chunks. | |||

$ ls -l t.bin_chunk.* | |||

-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.aa | |||

-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ab | |||

-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ac | |||

-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ad | |||

-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ae | |||

-rw-r--r-- 1 username username 6020481 Jun 8 11:09 t.bin_chunk.af | |||

# Transfer the files: | |||

$ rsync -axv t.bin_chunks.* username@arc-dtn.ucalgary.ca: | |||

</source> | |||

Then login to ARC and join the files: | |||

<source lang=bash> | |||

$ cat t.bin_chunk.* > t.bin | |||

$ ls -l | |||

-rw-r--r-- 1 username username 530308481 Jun 8 11:06 t.bin | |||

</source> | |||

Success. | |||

[[Category:Guides]] | [[Category:Guides]] | ||

[[Category:How-Tos]] | |||

{{Navbox Guides}} | |||

Latest revision as of 21:01, 10 April 2024

Data Transfer Nodes

For performance and resource reasons, file transfers should be performed on the data transfer node arc-dtn.ucalgary.ca rather than on the ARC login node. Since the ARC DTN has the same shares as ARC, any files you transfer to the DTN will also be available on ARC.

Command Line File Transfer Tools

You may use the following command-line file transfer utilities on Linux, macOS, and Windows. File transfers using these methods require your computer to be on the University of Calgary campus network or connected through the University of Calgary IT General VPN.

If you are working on a Windows computer, you may need to install these utilities separately, as they are not always installed by default. Newer versions of Windows 10 (1903 and up) include SSH as part of the built-in OpenSSH package. However, you may be better off using one of the tools listed in the Graphical File Transfer Tools section that follows.

scp: Secure Copy

scp is a secure and encrypted method of transferring files between machines via SSH. It is available on Linux and Mac computers by default and can be installed on Windows by installing the OpenSSH package.

The general format for the command is:

$ scp [options] source destination

- The source and destination fields can be a local file / directory or a remote one.

- The local location is a normal Unix path, absolute or relative.

- The remote location has the format username@remote.system.name:file/path.

- A relative remote file path is relative to the home directory of username on the remote system.

You may see all the available options with scp by viewing the man page: http://man7.org/linux/man-pages/man1/scp.1.html

Example Usage

Common operations are given below. On your desktop, to:

- Transfer a single file (e.g. data.dat) to ARC:

  desktop$ scp data.dat username@arc-dtn.ucalgary.ca:/desired/destination

- Transfer all files ending with .dat to ARC:

  desktop$ scp *.dat username@arc-dtn.ucalgary.ca:/desired/destination

- Transfer an entire directory to ARC:

  desktop$ scp -r my_data_directory/ username@arc-dtn.ucalgary.ca:/desired/destination

rsync

rsync is a utility for transferring and synchronizing files efficiently. Its efficiency comes from its delta-transfer algorithm, which reduces the amount of data sent over the network by sending only the differences between the source files and the existing files in the destination.

rsync can copy files and directories locally on a system or between two computers via SSH. Unlike scp, it is designed to synchronize two locations, so an interrupted transfer can be restarted by re-running the same rsync command without re-sending the files that were already transferred. Resuming a partial transfer is not possible with scp.

The general format for the command is similar to scp:

$ rsync [options] source destination

- The source and destination fields can be a local file / directory or a remote one.

- rsync cannot transfer files between two remote locations. The source or the destination must be a local path.

- The local location is a normal Unix path, absolute or relative.

- The remote location has the format username@remote.system.name:file/path.

- A relative remote file path is relative to the home directory of username on the remote system.

You may see all the available options with rsync by viewing the man page: http://man7.org/linux/man-pages/man1/rsync.1.html

Example Usage

Common operations are given below. On your desktop, to:

- Upload a single file (e.g. data.dat) from your workstation to ARC:

  desktop$ rsync -v data.dat username@arc-dtn.ucalgary.ca:/desired/destination

- Upload all files matching a wildcard (e.g. ending in .dat):

  desktop$ rsync -v *.dat username@arc-dtn.ucalgary.ca:/desired/destination

- Upload an entire directory (e.g. my_data to ~/projects/project2):

  desktop$ rsync -axv my_data username@arc-dtn.ucalgary.ca:projects/project2/

- Upload more than one directory:

  desktop$ rsync -axv my_data1 my_data2 my_data3 username@arc-dtn.ucalgary.ca:/desired/destination

- Download one file (e.g. output.dat) from ARC to the current directory on your workstation:

  ## Note the '.' at the end of the command, which refers to the current working directory on your computer
  desktop$ rsync -v username@arc-dtn.ucalgary.ca:projects/project1/output.dat .

- Download one directory (e.g. outputs) from ARC to the current directory on your workstation:

  desktop$ rsync -axv username@arc-dtn.ucalgary.ca:projects/project1/outputs .

sftp: secure file transfer protocol

- Manual page on-line: http://man7.org/linux/man-pages/man1/sftp.1.html

sftp is a file transfer program, similar to ftp, which performs all operations over an encrypted ssh transport. It may also use many features of ssh, such as public key authentication and compression.

sftp has an interactive mode, in which it understands a set of commands similar to those of ftp. Commands are case insensitive.
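Besides the interactive mode, sftp can read the same commands from a file with its -b (batch) option, which is handy for repeating a transfer. A minimal sketch, assuming a hypothetical batch file named arc_transfer.sftp and the example paths used in the rsync section; the script only writes the batch file, and the sftp command itself is left commented out because it needs network access to ARC-DTN:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical batch file name; each line is one sftp command, run in order.
# "put" uploads from your workstation, "get" downloads to it.
cat > arc_transfer.sftp <<'EOF'
cd projects/project1
put data.dat
get output.dat
bye
EOF

# Run the batch non-interactively (requires network access to ARC-DTN):
# sftp -b arc_transfer.sftp username@arc-dtn.ucalgary.ca
```

In batch mode sftp aborts if a command such as cd, put or get fails (prefixing a command with - makes sftp ignore its failure). Batch mode is intended for non-interactive authentication such as SSH keys, so whether it suits you depends on how you log in to ARC-DTN.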

rclone: rsync for cloud storage

Rclone is a command line program to sync files and directories to and from a number of online storage services (https://rclone.org/).

You may back up your data from arc-dtn to your personal 5TB UCalgary OneDrive to keep a safe second copy in a separate location.

Detailed rclone configuration instructions: https://rcs.ucalgary.ca/images/8/8e/Rclone_and_OneDrive_on_arc.pdf

Please note: if you sync your OneDrive with a PC or Mac, your new backup of your ARC home directory may be automatically replicated to that computer. You can prevent this in the PC or Mac OneDrive client (Help & Settings -> Settings -> Account -> Choose folders).

curl and wget: downloading from the internet

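To download a file with the ability to resume a partial download, use curl with -C - (resume based on the size of the existing local file) and -O (save under the remote file name), or wget with -c (continue a partially downloaded file):

curl -C - -O http://example.com/resource.tar.gz

wget -c http://example.com/resource.tar.gz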
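Because wget -c and curl -C - continue from the bytes already on disk, a download over an unreliable connection can simply be repeated until it completes. A minimal bash sketch of that idea, assuming a hypothetical helper named retry_transfer (it is not part of curl, wget or rsync); the same wrapper could also re-run an rsync command:

```shell
#!/usr/bin/env bash
set -uo pipefail

# retry_transfer MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND and, if it exits with a non-zero status,
# waits DELAY_SECONDS and runs it again, up to MAX_ATTEMPTS times in total.
retry_transfer() {
    local max_attempts=$1
    local delay=$2
    shift 2

    local attempt=1
    until "$@"; do
        if (( attempt >= max_attempts )); then
            echo "Giving up after ${attempt} attempts: $*" >&2
            return 1
        fi
        echo "Attempt ${attempt} failed; retrying in ${delay}s" >&2
        sleep "$delay"
        attempt=$(( attempt + 1 ))
    done
}

# Example usage (requires internet access). -f makes curl exit non-zero on
# HTTP errors, so a server error also triggers a retry.
# retry_transfer 5 30 wget -c http://example.com/resource.tar.gz
# retry_transfer 5 30 curl -f -C - -O http://example.com/resource.tar.gz
```

Each retry resumes rather than restarts, so the wrapper only makes sense for commands that continue a partial transfer.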

Graphical File Transfer Tools

FileZilla

FileZilla is a free cross-platform file transfer program that can transfer files via FTP and SFTP.

Installation

You may obtain FileZilla from the project's official website at: https://filezilla-project.org/download.php?type=client. Please note: the official installer may bundle ads and unwanted software, so be careful when clicking through.

Alternatively, you may obtain FileZilla from Ninite: https://ninite.com/filezilla

Connecting to ARC

If working off campus, first connect to the University of Calgary General VPN. Open FileZilla and connect to arc-dtn.ucalgary.ca on port 22.

[Figure: Connecting to ARC using FileZilla]

MobaXterm (Windows)

MobaXterm is the recommended tool for remote access and data transfer on Windows.

MobaXterm is a one-stop solution for most remote access work on a compute cluster or a Unix / Linux server.

It provides many Unix-like utilities for Windows, including an SSH client and an X11 graphics server, as well as a graphical interface for data transfer operations.

- Website: https://mobaxterm.mobatek.net/

WinSCP (Windows)

WinSCP is a free Windows file transfer tool.

https://winscp.net/eng/index.php

Globus File Transfer

Globus File Transfer is a cloud-based service for file transfer and file sharing. It uses GridFTP technology for high-speed, reliable data transfers.

- Globus Docs: https://docs.globus.org/

- The Alliance Docs on Globus: https://docs.alliancecan.ca/wiki/Globus

- Globus How-Tos: https://docs.globus.org/how-to/

How to get started

Free File Transfer: If you work at a non-profit research institution, such as the University of Calgary, you can use Globus for unlimited transfers between Globus Connect Server endpoints (data sources) and between a server and a personal endpoint.

The RCS group at the University of Calgary has a paid subscription to Globus services, called Globus Plus, currently at the High Assurance level (see Globus Subscription Levels: https://www.globus.org/subscriptions).

You do NOT need to be added to the subscription if you only want to use Globus to:

- Transfer your own files to and from the ARC cluster.

- Transfer files you have access to on the Digital Research Alliance of Canada clusters.

- Transfer files from your own computer using your personal Globus endpoint.

- Share data from the ARC cluster.

- Share data from the Alliance clusters.

You do need to be added to Globus Plus if you want to:

- Share data with other researchers using your personal Globus endpoint.

To have your account added to the Globus Plus service:

1. Email support@hpc.ucalgary.ca to request that your UCalgary account be added to the campus Globus Plus subscription.

2. Check your email for a 'Welcome to Globus' message with current instructions for federating your @ucalgary identity with Globus services.

Use Globus Web Application to transfer files

- To initiate data transfers using the Globus Web Application, go to https://app.globus.org and log in with your UCalgary account.

  If you have your own personal GlobusID, you can log in at https://globusid.org/ instead.

- In the left panel, click File Manager to select the collections you wish to use. The steps below use the example of transferring data from the ARC cluster (collection 1) to the Compute Canada Cedar cluster (collection 2).

- For the ARC data transfer node, search for arc-dtn-collection under Collection and select it from the drop-down menu. It is listed with the description "Mapped Collection (GCS) on UCalgary ARC-DTN endpoint". Authenticate using your UCalgary IT credentials; this brings you to your home directory on ARC.

- For the Compute Canada Cedar data transfer node, choose computecanada#cedar-dtn as collection 2 from the drop-down menu. Authenticate again, this time with your Compute Canada credentials, and navigate to the location you want to transfer the file to.

- Select the file to be transferred from collection 1 and start the transfer.

Use Globus Web Application to share files

You may grant access to a folder in your allocation on ARC, for uploading or sharing files, to any individual with a Globus account, whether obtained through Compute Canada or their own institution.

Please see https://docs.globus.org/how-to/share-files/

How it works

Note that Globus sharing allows you to share data located on the ARC cluster with people who do not have an ARC account.

For the external collaborator the required steps are:

- The individual who needs access to the data on ARC can get an individual GlobusID account at https://globus.org. It will be in the form globusid_name@globusid.org.

- If the individual already has access to Globus via another institution, that identity can be used instead; a personal GlobusID is not needed.

- Even if the collaborator has no affiliation with Globus, they can authenticate with their Google identity (or another supported identity).

For the data owner on ARC:

- Log in to https://globus.org using your UCalgary account.

- Create a shared collection from a data directory on ARC.

- Add globusid_name@globusid.org to the access list of the shared collection, with either read-only or read-write permissions. After that, the collaborator will be able to access the shared collection using their individual GlobusID account.

- Globus will email the link to the shared data to the collaborator, using the email address you entered in the form.

Transferring Large Datasets

Using screen and rsync

If you want to transfer a large amount of data from a remote Unix system to ARC, you can use rsync to handle the transfer. However, you would have to keep the SSH session from your workstation connected for the entire transfer, which is often inconvenient or not feasible.

To overcome this, run the rsync transfer inside a screen virtual session on ARC-DTN. screen creates a terminal session that runs on ARC-DTN itself and lets you reconnect to it from new SSH sessions from your workstation. For large transfers, please use the arc-dtn.ucalgary.ca data transfer node rather than the ARC login node.

To begin, log in to ARC-DTN and start screen with the screen command:

# Log in to ARC-DTN
$ ssh username@arc-dtn.ucalgary.ca
# Start a screen session
$ screen
# While in the new screen session, start the transfer with rsync.
$ rsync -axv ext_user@external.system:path/to/remote/data .
# Detach from the screen session with the hotkey 'Ctrl-a d'
# You may now disconnect from ARC, close the lid of your laptop, or turn off the computer.

To check whether the transfer has finished:

# Log in to ARC-DTN
$ ssh username@arc-dtn.ucalgary.ca
# Reconnect to the screen session
$ screen -r
# If the transfer has finished, close the screen session.
$ exit
# If the transfer is still running, detach from the screen session with the hotkey 'Ctrl-a d'

Very large files

If the files are large and the transfer speed is low, the transfer may fail before a file has been completely transferred. rsync may not help much here: by default it discards a partially transferred file when the transfer is interrupted, so the next run starts that file over. (The --partial option, also included in -P, keeps the partial file so that a re-run can reuse it.)

One solution is to split the large file into smaller chunks, transfer them using rsync, and then join them on the remote system (ARC, for example):

# The large file is about 506 MiB in this example.
$ ls -l t.bin
-rw-r--r-- 1 username username 530308481 Jun 8 11:06 t.bin
# Split the file into 100 MiB chunks:
$ split -b 100M t.bin t.bin_chunk.
# Check the chunks.
$ ls -l t.bin_chunk.*
-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.aa
-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ab
-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ac
-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ad
-rw-r--r-- 1 username username 104857600 Jun 8 11:09 t.bin_chunk.ae
-rw-r--r-- 1 username username 6020481 Jun 8 11:09 t.bin_chunk.af
# Transfer the chunks:
$ rsync -axv t.bin_chunk.* username@arc-dtn.ucalgary.ca:

Then log in to ARC and join the files:

$ cat t.bin_chunk.* > t.bin
$ ls -l t.bin
-rw-r--r-- 1 username username 530308481 Jun 8 11:06 t.bin

Success.
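Before deleting the original or the chunks, it is worth confirming that the rejoined file is byte-for-byte identical to the original. One way is to record a checksum before splitting, transfer the checksum file along with the chunks (for example, rsync -axv t.bin_chunk.* t.bin.sha256 username@arc-dtn.ucalgary.ca:), and check it after joining. A minimal local sketch, assuming GNU coreutils (on macOS, shasum -a 256 offers the same check) and a small hypothetical test file instead of a real dataset:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical 5 MiB test file standing in for the real large file.
head -c $(( 5 * 1024 * 1024 )) /dev/urandom > t.bin

# Sending side: record the checksum of the original before splitting.
sha256sum t.bin > t.bin.sha256

# Split into 2 MiB chunks: t.bin_chunk.aa, t.bin_chunk.ab, t.bin_chunk.ac.
split -b 2M t.bin t.bin_chunk.

# Simulate the receiving side: keep the original aside, then rejoin the chunks.
# The shell sorts the glob alphabetically, so the chunks are joined in order.
mv t.bin t.bin.orig
cat t.bin_chunk.* > t.bin

# Check the rejoined file against the recorded checksum; prints "t.bin: OK".
sha256sum -c t.bin.sha256
```

If the check reports FAILED instead, re-transfer the chunks before joining them again.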