Apache Spark on Marc

No edit summary |

m (Added navbox) |

||

| (3 intermediate revisions by one other user not shown) | |||

| Line 41: | Line 41: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

* This message says that the Jupyter server is running on node sg5 on port | * This message says that the Jupyter server is running on node sg5 on port 13452. Port 13452 needs to be forwarded from the server running Putty to the cluster. | ||

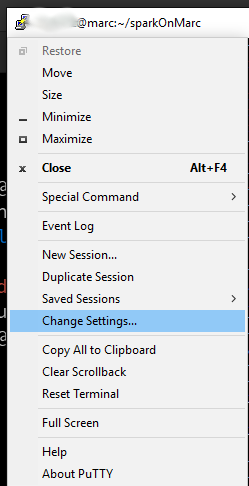

* Click the Putty icon in the upper left corner of the Putty window and select "Change Settings" | * Click the Putty icon in the upper left corner of the Putty window and select "Change Settings" | ||

[[File:ChangeSettings.png|alt=Image showing where the "Change Settings" option for Putty is located|none|thumb|Image showing where the "Change Settings" option for Putty is located]] | [[File:ChangeSettings.png|alt=Image showing where the "Change Settings" option for Putty is located|none|thumb|Image showing where the "Change Settings" option for Putty is located]] | ||

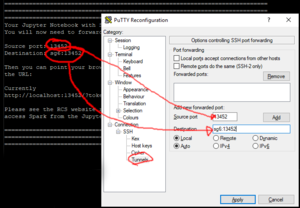

* If there's already | * If there's already 13452 listed in the Forwarded ports box click it and click Remove -- the Forwarded ports box should be empty. | ||

* In the Change Settings dialogue box pick "Tunnels" in the left hand box (you'll have to open the Various levels of the tree to get there) | * In the Change Settings dialogue box pick "Tunnels" in the left hand box (you'll have to open the Various levels of the tree to get there) | ||

* | * Copy the circled source port "13452" into the Source port box in Putty | ||

* | * Copy the circled value from the putty window into the Destination box | ||

* The "Forwarded ports" box should now contain a concatenation of the text in the source port and destination boxes. i.e. " | [[File:SettingsDialogueBox.png|alt=Putty Settings dialogue box showing the required port forwarding.|none|thumb|Putty Settings dialogue box showing the required port forwarding.]] | ||

* Click the Add button. (Forgetting to do this is the biggest cause of problems) | |||

* The "Forwarded ports" box should now contain a concatenation of the text in the source port and destination boxes. i.e. "13452:sg4:13452" | |||

* Click Apply | * Click Apply | ||

* Return to the myappmf tab in your browser and start a Google Chrome there. '''Note:''' It is important that you use the Chrome from myappmf not your desktop. | * Return to the myappmf tab in your browser and start a Google Chrome there. '''Note:''' It is important that you use the Chrome from myappmf not your desktop. | ||

* Paste the link containing http://localhost: | * Paste the link containing http://localhost:...?token= found in the putty terminal window into the browser started from myappmf and you should be rewarded with a Jupyter Notebook session. | ||

==Troubleshooting Forwarding== | ==Troubleshooting Forwarding== | ||

* There are a number of things that can go wrong with the port forwarding above. | * There are a number of things that can go wrong with the port forwarding above. | ||

'''Problem:''' The job running the Jupyter notebook and Spark cluster can run out of time in which case you'll have to start a new Jupyter/Spark job.<BR> | '''Problem:''' The job running the Jupyter notebook and Spark cluster can run out of time in which case you'll have to start a new Jupyter/Spark job.<BR> | ||

'''Solution:''' Start a new on and follow the solution to the next problem to "clean up"<BR> | '''Solution:''' Start a new on and follow the solution to the next problem to "clean up"<BR> | ||

In your Python file or terminal load the appropriate python modules and instantiate the cluster: | In your Python file or terminal load the appropriate python modules and instantiate the cluster: | ||

| Line 91: | Line 87: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

You now have a sc (Spark Context) and sqlCtx (SQL Context) objects to operate on. Please remember to return to the | You now have a sc (Spark Context) and sqlCtx (SQL Context) objects to operate on. Please remember to return to the Putty window screen and terminate the Jupyter + Spark job when you are finished or the resources will stay allocated to you until the timelimit runs out which will prevent others from accessing the limited resources on Marc. | ||

There are many Spark tutorials out there. Here are some good places to look: | There are many Spark tutorials out there. Here are some good places to look: | ||

| Line 103: | Line 99: | ||

[[Category:Marc]] | [[Category:Marc]] | ||

[[Category:Software]] | [[Category:Software]] | ||

{{Navbox Software}} | |||

Latest revision as of 22:26, 20 September 2023

Overview

This guide gives an overview of running Apache Spark clusters under the existing scheduling system of the Level 3/4 Rated Marc cluster at the University of Calgary.

Because of mandatory security restrictions on access to Level 3 and 4 data, we cannot provide access to Spark through Open OnDemand as is done on Arc. The procedure for reaching Spark and its associated Jupyter notebook is therefore slightly more involved.

Connecting

- Log in to Marc normally as described on [MARC_accounts]

- Start the Jupyter notebook and Spark cluster as a job with something like the following (changing the number of CPUs and the amount of memory to fit your requirements):

sbatch -N1 -n 38 --cpus-per-task=1 --mem=100G --time=1:00:00 /global/software/spark/sparkOnMarc/sparkOnMarc.sh

Submitted batch job 14790

- The above command returns a job number, "14790" in this example. A file named slurm-<jobnumber>.out will be created in the current directory. After a few moments the file will contain the connection information you need to access a Jupyter notebook that is connected to the Spark cluster that was just started.

$ cat slurm-14790.out

<snip -- lots of logging messages that can be ignored unless there's problems>

Discovered Jupyter Notebook server listening on port 13452!

===========================================================================

===========================================================================

===========================================================================

Your Jupyter Notebook with Spark cluster is now active. Inside Putty

You will now need to forward:

Source port: 13452

Destination: sg6:13452

Then you can point your browser started from myappmf.ucalgary.ca to

the URL:

Currently

http://localhost:13452/?token=6b1bc28386204207f67d6ae9d3fb97de455fa105b9b8e242

Please see the RCS website rcs.ucalgary.ca for information on how to

access Spark from the Jupyter notebook.

===========================================================================

===========================================================================

===========================================================================
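The three values needed for the following steps (source port, destination, and URL) can also be pulled out of the job output with a short script. The following is a minimal sketch, assuming the output keeps the "Source port:", "Destination:" and "http://localhost:...?token=" layout shown above; the function name `parse_connection_info` is hypothetical and not part of the sparkOnMarc tooling.

```python
import re


def parse_connection_info(text):
    """Extract forwarding details from the text of slurm-<jobnumber>.out.

    Returns a dict with the keys "source_port", "destination" and "url".
    A value is None when the matching line is not (yet) in the output.
    """
    # Patterns follow the sample output shown above; they are an assumption
    # about the format, not a documented interface.
    patterns = {
        "source_port": r"Source port:\s*(\d+)",
        "destination": r"Destination:\s*(\S+)",
        "url": r"(http://localhost:\d+/\?token=\w+)",
    }
    info = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, text)
        info[key] = match.group(1) if match else None
    return info


# Example use on the cluster (job number taken from the sbatch output):
# with open("slurm-14790.out") as job_output:
#     print(parse_connection_info(job_output.read()))
```

If any value comes back as None, the job has most likely not finished starting; wait a few moments and read the file again.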

- This message says that the Jupyter server is running on node sg6 on port 13452. Port 13452 needs to be forwarded from the server running Putty to the cluster.

- Click the Putty icon in the upper left corner of the Putty window and select "Change Settings"

- In the Change Settings dialogue box pick "Tunnels" in the left hand box (you'll have to open the various levels of the tree to get there)

- If 13452 is already listed in the Forwarded ports box, select it and click Remove; the Forwarded ports box should be empty before you continue.

- Copy the source port from the job output ("13452" in this example) into the Source port box in Putty

- Copy the destination value from the job output ("sg6:13452" in this example) into the Destination box

- Click the Add button. (Forgetting to do this is the biggest cause of problems)

- The "Forwarded ports" box should now contain a concatenation of the text in the source port and destination boxes, e.g. "13452:sg6:13452"

- Click Apply

- Return to the myappmf tab in your browser and start Google Chrome there. Note: It is important that you use the Chrome from myappmf, not the one on your desktop.

- Paste the link containing http://localhost:...?token= found in the Putty terminal window into the browser started from myappmf and you should be rewarded with a Jupyter Notebook session.

Troubleshooting Forwarding

- There are a number of things that can go wrong with the port forwarding above.

Problem: The job running the Jupyter notebook and Spark cluster can run out of time, in which case you'll have to start a new Jupyter/Spark job.

Solution: Start a new one and follow the solution to the next problem to "clean up"
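When it is unclear whether the tunnel itself is working, it can help to test whether anything is accepting connections on the forwarded port. The following is a minimal sketch, assuming Python is available on the machine where Putty runs (this may not be the case in your environment); `port_is_open` is a hypothetical helper name, and 13452 is the example port from above.

```python
import socket


def port_is_open(port, host="127.0.0.1", timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out)
        # when nothing is listening on the port.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example: check the forwarded port from the walkthrough above.
# print(port_is_open(13452))
```

A result of False suggests the forwarding was never added (for example the Add button was skipped). A result of True only shows that the local end of the tunnel is listening; the job on the cluster may still have ended.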

In your Python file or terminal load the appropriate python modules and instantiate the cluster:

import os

import atexit

import sys

import re

import pyspark

from pyspark.conf import SparkConf

from pyspark.context import SparkContext

from pyspark.sql import SQLContext

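# Read "key value" pairs from the config file, skipping blank lines and

# splitting only on the first run of whitespace so values may contain spaces.

with open(os.environ['SPARK_CONFIG_FILE']) as config_file:

    conflines=[tuple(line.strip().split(None, 1)) for line in config_file if line.strip()]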

conf=SparkConf()

conf.setAll(conflines)

conf.setMaster("spark://%s:%s"% (os.environ['SPARK_MASTER_HOST'],os.environ['SPARK_MASTER_PORT']))

sc=pyspark.SparkContext(conf=conf)

#You need this line if you want to use SparkSQL

sqlCtx=SQLContext(sc)

#YOUR CODE GOES HERE

You now have sc (Spark Context) and sqlCtx (SQL Context) objects to operate on. Please remember to return to the Putty window and terminate the Jupyter + Spark job when you are finished (for example with scancel <jobnumber>). Otherwise the resources stay allocated to you until the time limit runs out, which prevents others from using the limited resources on Marc.
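As a first check that the cluster responds, a small computation can be run through sc. The following is a minimal sketch, assuming sc was created as shown above; the helper name `sum_of_squares` is hypothetical, and it uses only the standard RDD calls `parallelize`, `map` and `sum`.

```python
def sum_of_squares(sc, n):
    """Distribute the integers 1..n across the cluster, square each one,
    and add up the results on the driver."""
    numbers = sc.parallelize(range(1, n + 1))
    return numbers.map(lambda x: x * x).sum()


# In the notebook, after sc has been created:
# print(sum_of_squares(sc, 100))  # 338350
```

If this call hangs or fails, the Spark cluster job has probably ended or the notebook was started before the cluster was ready.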

There are many Spark tutorials out there. Here are some good places to look:

- https://spark.apache.org/docs/latest/quick-start.html

- https://spark.apache.org/docs/latest/rdd-programming-guide.html

- https://spark.apache.org/docs/latest/sql-programming-guide.html

HINT: It helps to search for "pyspark", as that returns Python results instead of Scala, another common language used to interact with Spark.
