Bioinformatics applications


Revision as of 05:59, 8 June 2021

Strategies to write efficient bioinformatics workflows for the ARC high performance computing (HPC) cluster

One of the challenges of working with large genomics data sets is their long runtimes. Using the computing resources on the Advanced Research Computing (ARC) cluster effectively and efficiently can shorten those runtimes.

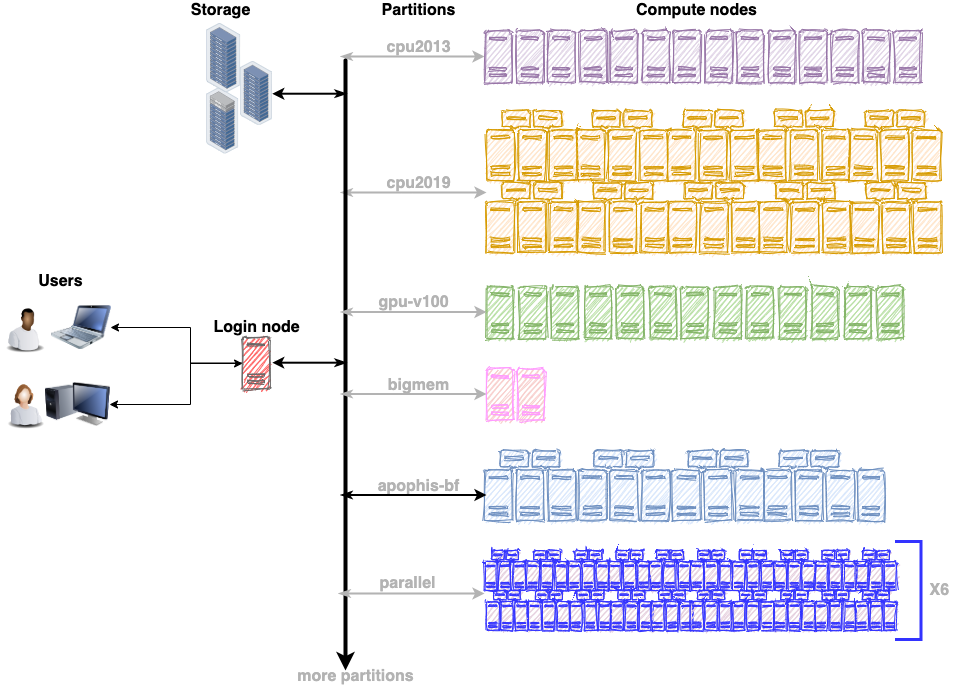

The ARC cluster offers a variety of hardware to suit the requirements of different workflows. As a first step, review the compute resources on the ARC cluster (weblink); this will help you choose the appropriate partition for the nature of your workflow. For example, choose the gpu-v100 partition for GPU accelerators or the bigmem partition for a memory-intensive workflow. Each partition has multiple compute nodes with similar hardware specifications. The illustration shows that the gpu-v100 partition has 13 compute nodes, the cpu2019 partition has 40 compute nodes, the cpu2013 partition has 14 compute nodes, and so on.
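Partition details can also be checked from a login node with SLURM's `sinfo` command. The sketch below is only an illustration, not ARC's documented procedure: the helper name `list_partitions` is hypothetical, and the partitions and values it reports depend on how the cluster is configured.

```shell
#!/bin/bash
# Hypothetical helper (not part of SLURM or ARC tooling): print one line per
# group of identically configured nodes in each partition.
list_partitions() {
    if ! command -v sinfo >/dev/null 2>&1; then
        echo "sinfo not found: run this on a cluster login node" >&2
        return 1
    fi
    # %P = partition name, %D = number of nodes,
    # %c = CPUs per node,  %m = memory per node in MB
    sinfo --format="%P %D %c %m"
}
```

Calling `list_partitions` on a login node should make it easier to compare, for example, the core count and memory of a general-purpose partition against bigmem before writing the job script.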

This section will review the SLURM job submission scripts for the bioinformatics applications below.

- FastQC - A high throughput sequence QC analysis tool

- Burrows-Wheeler Aligner (BWA)

- Samtools

FastQC

FastQC assesses the quality of your sequencing data. It is available as a module on the ARC cluster. To run the installed version of fastqc, load the biobuilds/2017.11 module as below:

[tannistha.nandi@arc ~]$ module load biobuilds/2017.11

Loading biobuilds/2017.11

Loading requirement: java/1.8.0 biobuilds/conda

[tannistha.nandi@arc ~]$ fastqc --version

FastQC v0.11.5

[tannistha.nandi@arc ~]$ fastqc --help

FastQC - A high throughput sequence QC analysis tool

SYNOPSIS

fastqc seqfile1 seqfile2 .. seqfileN

fastqc [-o output dir] [--(no)extract] [-f fastq|bam|sam] [-c contaminant file] seqfile1..seqfileN

In this example, I will work with the following 33 GB genome sequencing data file in fastq format.

[tannistha.nandi@arc ~]$ ls -l

-rw-rw-r-- 1 tannistha.nandi tannistha.nandi 33GB Jan 24. 2018 SN4570284.fq.gz

I will submit the fastqc job to a backfill partition called apophis-bf, where each compute node has 40 cores and 185 GB of RAM. The job script below requests 1 core (--cpus-per-task) for 1 hour, along with 300 MB of RAM, on the apophis-bf partition. The RAM estimate of 300 MB is based on the fastqc user guide, which states that each thread is allocated 250 MB of memory. On the ARC cluster, CPUs and cores refer to the same thing. Once the SLURM job scheduler allocates these resources on a compute node, the job runs the first command to load the biobuilds/2017.11 module, which sets the path to fastqc. The job then launches fastqc on the data file.
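The memory estimate above can be written as a small calculation. This is a minimal sketch under stated assumptions: the 250 MB per thread comes from the fastqc guidance cited above, while the fixed 50 MB of headroom is my own assumption, chosen because it reproduces the 300 MB request for a single thread. The function name `fastqc_mem_mb` is hypothetical.

```shell
#!/bin/bash
# Estimate a --mem value (in MB) for a fastqc job.
# Assumptions: 250 MB per thread (fastqc guidance) plus an assumed
# 50 MB of fixed headroom; adjust both to match your own measurements.
fastqc_mem_mb() {
    local threads="${1:-1}"
    local per_thread_mb=250
    local headroom_mb=50
    echo $(( threads * per_thread_mb + headroom_mb ))
}

# One thread, as in the job described here
echo "--mem=$(fastqc_mem_mb 1)M"
```

For one thread this prints `--mem=300M`, matching the request used in this example. Treat the result as a starting point and compare it against the measured memory use after the job finishes.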

#!/bin/bash

#<------------------------Request for Resources----------------------->

#SBATCH --job-name=fastqc-S

#SBATCH --mem=300M

#SBATCH --nodes=1

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=1

#SBATCH --time=01:00:00

#SBATCH --partition=apophis-bf

#<------------------------Set environment variables------------------->

module load biobuilds/2017.11

#<------------------------Run fastqc---------------------------------->

# fastqc does not create the output directory, so make sure it exists

mkdir -p output

fastqc -o output --noextract -f fastq SN4570284.fq.gz

Save the above script in a file called ‘fastqc.sh’ and submit it to SLURM with the sbatch command, as below.

[tannistha.nandi@arc ~]$ sbatch fastqc.sh

Submitted batch job 9658868
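When submitting from a script, it can be convenient to capture the job ID from sbatch's confirmation message so the job can be monitored later. The following is a sketch only; the helper name `job_id_from_sbatch` is hypothetical, and the message is fed in with `echo` here so the example runs without a scheduler.

```shell
#!/bin/bash
# Hypothetical helper: extract the numeric job ID from sbatch's
# "Submitted batch job <id>" message read on standard input.
job_id_from_sbatch() {
    awk '/^Submitted batch job/ { print $4 }'
}

# Stand-in for the real call, which on the cluster would be:
#   job_id=$(sbatch fastqc.sh | job_id_from_sbatch)
job_id=$(echo "Submitted batch job 9658868" | job_id_from_sbatch)
echo "$job_id"

# With the ID in hand, standard SLURM commands can track the job:
#   squeue -j "$job_id"                                      # while pending or running
#   sacct -j "$job_id" --format=JobID,Elapsed,MaxRSS,State   # after completion
```

sbatch also accepts a `--parsable` option that prints the job ID without the surrounding text, which avoids the parsing step.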

Job 9658868 ran for ~46 minutes on a single core and used ~286 MB of RAM.

Job efficiency

| Metric | Value |
|---|---|
| # files | 1 |
| File size | 33GB |
| # cores | 1 |
| CPU efficiency | 99.28% |
| Run time | 00:46:13 |
| Memory utilized | 285.46 MB |
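The figures above can be compared with what the job requested to judge how well the request was sized. The sketch below uses the values from this example (300 MB and 1 hour requested; 285.46 MB and 00:46:13 used). The helper names `percent_used` and `to_seconds` are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: percentage of a requested resource that was used.
percent_used() {
    awk -v used="$1" -v requested="$2" \
        'BEGIN { printf "%.2f\n", 100 * used / requested }'
}

# Hypothetical helper: convert HH:MM:SS to seconds.
to_seconds() {
    echo "$1" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# Memory used versus the 300 MB requested
mem_pct=$(percent_used 285.46 300)
# Run time versus the 1-hour limit requested
time_pct=$(percent_used "$(to_seconds 00:46:13)" "$(to_seconds 01:00:00)")

echo "Memory: ${mem_pct}% of request; wall time: ${time_pct}% of limit"
```

This prints `Memory: 95.15% of request; wall time: 77.03% of limit`, which suggests the memory request leaves little slack while the time limit has a comfortable margin for this input size.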